Introduction

This setup is an attempt to reuse a couple of OxygenRAID400 (Infortrend A16U-G1A3) systems that have proven too unstable for normal use. In particular, the controllers often fail to recognize disk problems, and occasionally become extremely slow, stop responding to SCSI requests, or freeze completely. Each of these causes a service outage, which is not acceptable because it may happen quite frequently (once a week!) if the devices are actually used.

For this reason it was decided to connect two controllers (raid-iole & raid-iolaos) to each of two hosts (iole1 & iole2), and to use Linux's md driver in RAID1 mode so that the failure of a complete controller can be tolerated without service interruption.

Tests performed

Controller shutdown of raid-iole, followed by a controller reset

- no service interruption

- mdmonitor alert emails received from both hosts

- logsurfer alerts received for both hosts

- after controller reset, MD mirrors could be resynced without reboot

- one physical drive did not survive the reset - it was no longer accessible afterwards, although there seemed to be no problem with it immediately before

- SCSI transfer rates were reduced to < 40 MB/s on target 1 of this controller on both iole1 and iole2

- no big deal since it's not much faster anyway, but requires a scheduled 5 minutes downtime (host reboot is sufficient, no controller reset required)

- during iole1 reboot, SCSI BIOS reported bad SMART status of ALL three drives on raid-iole (this state requires a keypress on the console to continue the boot, or a reset, to get the host up)

- afterwards, on iole2 (which was still syncing the mirrors), smartctl gave a bad SMART status for all raid-iole targets once and only once:

    [iole2] ~ # /usr/sbin/smartctl -H /dev/sda
    smartctl version 5.1-11 Copyright (C) 2002-3 Bruce Allen
    Home page is http://smartmontools.sourceforge.net/
    SMART Sense: FAILURE PREDICTION THRESHOLD EXCEEDED [asc=5d,ascq=0]
    [iole2] ~ # /usr/sbin/smartctl -H /dev/sdb
    smartctl version 5.1-11 Copyright (C) 2002-3 Bruce Allen
    Home page is http://smartmontools.sourceforge.net/
    SMART Sense: FAILURE PREDICTION THRESHOLD EXCEEDED [asc=5d,ascq=0]
    [iole2] ~ # /usr/sbin/smartctl -H /dev/sdc
    smartctl version 5.1-11 Copyright (C) 2002-3 Bruce Allen
    Home page is http://smartmontools.sourceforge.net/
    SMART Sense: FAILURE PREDICTION THRESHOLD EXCEEDED [asc=5d,ascq=0]

All following queries returned status OK. This is consistent with the observation that subsequent reboots of the host systems passed without the need to acknowledge the SMART status.

- On iole2, md0 (/boot) was deliberately made dirty by touching a file in /boot while raid-iole was off. The subsequent resync was successful, and the filesystems on both sda and sdd were ok, but GRUB failed to load its stage2. As a remedy, iole2 was booted via PXE (using kernel and initrd from iole1) and GRUB was re-installed into the master boot record of sda (see below).

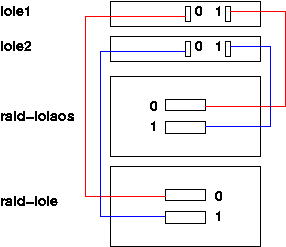

Cabling

This is the rear view of the SCSI cabling:

RAID array setup and mapping

| RAID | drives | LD | Partition | SCSI Channel | ID | Host | Adapter | device |
|---|---|---|---|---|---|---|---|---|
| raid-iole | 1-4 | 0 | 0 | 0 | 1 | iole1 | 0 | sda |
| | | | 1 | | 2 | | | sdb |
| | 5-8 | 1 | - | | 3 | | | sdc |
| | 9-12 | 2 | 0 | 1 | 1 | iole2 | 0 | sda |
| | | | 1 | | 2 | | | sdb |
| | 13-16 | 3 | - | | 3 | | | sdc |
| raid-iolaos | 1-4 | 0 | 0 | 0 | 1 | iole1 | 1 | sdd |
| | | | 1 | | 2 | | | sde |
| | 5-8 | 1 | - | | 3 | | | sdf |
| | 9-12 | 2 | 0 | 1 | 1 | iole2 | 1 | sdd |
| | | | 1 | | 2 | | | sde |
| | 13-16 | 3 | - | | 3 | | | sdf |
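As a rough illustration of how the table can be used, the following sketch encodes the controller-to-device mapping and answers the question "which block devices does a host lose if one controller fails?". The dictionary is transcribed from the table, reading blank cells as repeating the value above them; the function names are made up for this example.

```python
# Host device mapping transcribed from the table above (assumption: blank
# cells repeat the value of the row above).
# (controller, host) -> devices in SCSI ID order (IDs 1, 2, 3).
MAPPING = {
    ("raid-iole", "iole1"): ["sda", "sdb", "sdc"],
    ("raid-iole", "iole2"): ["sda", "sdb", "sdc"],
    ("raid-iolaos", "iole1"): ["sdd", "sde", "sdf"],
    ("raid-iolaos", "iole2"): ["sdd", "sde", "sdf"],
}


def devices_lost(controller, host):
    """Return the host's block devices that vanish if `controller` fails."""
    return MAPPING[(controller, host)]


def surviving_devices(controller, host):
    """Return the host's devices that are served by the other controller."""
    return sorted(
        dev
        for (ctrl, h), devs in MAPPING.items()
        if h == host and ctrl != controller
        for dev in devs
    )


print(devices_lost("raid-iole", "iole2"))
print(surviving_devices("raid-iole", "iole2"))
```

Since every md mirror pairs one device from each controller, the surviving list is exactly the set of mirror halves that keep the host running.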

kickstart partitioning

    clearpart --drives=sda,sdd --initlabel
    part raid.01 --size 256 --ondisk sda
    part raid.03 --size 1024 --ondisk sda
    part raid.05 --size 2048 --ondisk sda
    part raid.07 --size 10240 --ondisk sda
    part raid.09 --size 1 --ondisk sda --grow
    part raid.02 --size 256 --ondisk sdd
    part raid.04 --size 1024 --ondisk sdd
    part raid.06 --size 2048 --ondisk sdd
    part raid.08 --size 10240 --ondisk sdd
    part raid.10 --size 1 --ondisk sdd --grow
    raid /boot --level=1 --device=md0 --fstype ext2 raid.01 raid.02
    raid /afs_cache --level=1 --device=md1 --fstype ext3 raid.03 raid.04
    raid swap --level=1 --device=md2 --fstype swap raid.05 raid.06
    raid / --level=1 --device=md3 --fstype ext3 raid.07 raid.08
    raid /usr1 --level=1 --device=md4 --fstype ext3 raid.09 raid.10

This should be safe to reuse if anything ever has to be reinstalled, since there are no data partitions on any of these block devices. To be on the safe side, add the following to the cks3 files:

    $cfg{PREINSTALL_ADD} = "sleep 86400";

and rerun CKS3. Then check /proc/partitions on virtual console #2: you should see 6 disks, and sda and sdd should be the small ones. It is then safe to "killall -TERM sleep" to continue the installation.
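The manual check of /proc/partitions could be sketched as follows. This is only an illustration: the function names are invented, the sample sizes are made up (not taken from the real systems), and whether Python is available in the install environment is not something this page confirms.

```python
import re


def whole_disks(partitions_text):
    """Map whole-disk SCSI device names (sda, sdb, ...) to size in blocks."""
    disks = {}
    for line in partitions_text.splitlines():
        fields = line.split()
        # Data lines start with the numeric major number; skip header/blank lines.
        if len(fields) < 4 or not fields[0].isdigit():
            continue
        name, blocks = fields[3], int(fields[2])
        if re.fullmatch(r"sd[a-z]+", name):  # no trailing partition number
            disks[name] = blocks
    return disks


def layout_looks_safe(partitions_text):
    """True if six disks are visible and sda and sdd are the two smallest."""
    disks = whole_disks(partitions_text)
    if len(disks) != 6:
        return False
    smallest_two = sorted(disks, key=disks.get)[:2]
    return sorted(smallest_two) == ["sda", "sdd"]


# Illustrative sample only; the block counts are invented.
SAMPLE = """major minor  #blocks  name
   8     0  102400000 sda
   8     1     264960 sda1
   8    16 1068930000 sdb
   8    32 1171340000 sdc
   8    48  102400000 sdd
   8    64 1068930000 sde
   8    80 1171340000 sdf
"""

print(layout_looks_safe(SAMPLE))
```

On a live system the text would come from reading /proc/partitions instead of the embedded sample.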

adding the MD devices for the vice partitions

First, created primary partitions spanning the whole device on sdb, sdc, sde, sdf. This was done with fdisk. Note the type must be fd (Linux RAID Autodetect). Do not change the partition type while the corresponding md device is active.

Then created the devices:

    mdadm --create /dev/md5 -l 1 --raid-devices=2 /dev/sdb1 /dev/sde1
    mdadm --create /dev/md6 -l 1 --raid-devices=2 /dev/sdc1 /dev/sdf1

Initialization takes very long! When all four devices were initialized at the same time, it took > 24 hours, even though the max bandwidth was set to 50000 in /proc/sys/dev/raid/speed_limit_max. The limiting factor seemed to be the writes to raid-iolaos.

installing GRUB on sdd

While kickstart will happily put /boot onto /dev/md0, it will install GRUB in the master boot record of /dev/sda only. Hence when raid-iole is not operational, neither iole1 nor iole2 could boot.

To remedy this, GRUB was installed into the master boot record of sdd on both systems manually. After starting grub (as root):

    grub > device (hd0) /dev/sdd
    grub > root (hd0,0)
    grub > setup (hd0)
    grub > quit

This of course assumes that /boot is a separate partition. The device command accounts for the fact that /dev/sdd will be the first BIOS drive if raid-iole is unavailable.


This procedure has to be repeated whenever md0 has had to be resynced after a raid-iolaos problem (sync to sdd).

re-installing GRUB

This will be necessary if md0 had to be resynced after a raid-iole problem (sync to sda). Run grub (as root), and then

    grub > root (hd0,0)
    grub > setup (hd0)
    grub > quit

This is just like what's described above, but for the primary master boot record. If you do this, you probably want to do it for sdd as well.

mdadm.conf

The file /etc/mdadm.conf was created by manually entering the DEVICE lines, and stripping the redundant "level=raid1" from the output of mdadm --detail --scan . On iole1, it looks like this:

    DEVICE /dev/sda[1-6] /dev/sdd[1-6]
    DEVICE /dev/sd[bc]1 /dev/sd[ef]1
    ARRAY /dev/md0 num-devices=2 UUID=c8c1f4cc:02c4e0db:5a1db3e2:38677d91
       devices=/dev/sda1,/dev/sdd1
    ARRAY /dev/md1 num-devices=2 UUID=7c2f67a9:2d720999:19391348:942bdb5a
       devices=/dev/sda5,/dev/sdd5
    ARRAY /dev/md2 num-devices=2 UUID=38e72de0:3e5babee:ff53494b:0ca93c4f
       devices=/dev/sda3,/dev/sdd3
    ARRAY /dev/md3 num-devices=2 UUID=1c6cf5e1:9d364005:4ff3e20c:b4a78ba9
       devices=/dev/sda2,/dev/sdd2
    ARRAY /dev/md4 num-devices=2 UUID=a13d764a:2691787e:10de06a8:94c92891
       devices=/dev/sda6,/dev/sdd6
    ARRAY /dev/md5 num-devices=2 UUID=3723934c:d4dcb812:80e48df0:47261873
       devices=/dev/sdb1,/dev/sde1
    ARRAY /dev/md6 num-devices=2 UUID=d709cc37:c2e88eb0:3eed3a0b:86da8b2d
       devices=/dev/sdc1,/dev/sdf1
    MAILADDR mail-alert@ifh.de

The MAILADDR value (and only that) is maintained by the raid feature. Notice that this file cannot be copied from one host to the other even if the setup is identical, because the UUIDs are (and should be!) different.
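The manual step of stripping "level=raid1" from the scan output could be sketched like this. The sample input is reconstructed from the md0 entry shown above; where exactly mdadm places the level= word within the line is an assumption here, and the function name is invented.

```python
import re


def strip_level(scan_output):
    """Remove the 'level=raid1' word from each line of the scan output."""
    cleaned = []
    for line in scan_output.splitlines():
        cleaned.append(re.sub(r"\s+level=raid1\b", "", line))
    return "\n".join(cleaned)


# Assumed shape of one entry of `mdadm --detail --scan` output.
SAMPLE = (
    "ARRAY /dev/md0 level=raid1 num-devices=2 "
    "UUID=c8c1f4cc:02c4e0db:5a1db3e2:38677d91\n"
    "   devices=/dev/sda1,/dev/sdd1"
)

print(strip_level(SAMPLE))
```

The DEVICE and MAILADDR lines are not produced by this step; as described above, they are entered by hand or maintained by the raid feature.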

maintenance

everything ok ?

This is what /proc/mdstat looks like if all is fine:

    Personalities : [raid1]
    read_ahead 1024 sectors
    Event: 7
    md0 : active raid1 sdd1[1] sda1[0]
          264960 blocks [2/2] [UU]
    md3 : active raid1 sdd2[1] sda2[0]
          10482304 blocks [2/2] [UU]
    md2 : active raid1 sdd3[1] sda3[0]
          2096384 blocks [2/2] [UU]
    md1 : active raid1 sdd5[1] sda5[0]
          1052160 blocks [2/2] [UU]
    md4 : active raid1 sdd6[1] sda6[0]
          88501952 blocks [2/2] [UU]
    md5 : active raid1 sde1[1] sdb1[0]
          1068924800 blocks [2/2] [UU]
    md6 : active raid1 sdf1[1] sdc1[0]
          1171331136 blocks [2/2] [UU]
    unused devices: <none>

If an MD array is degraded, it looks like this:

    md5 : active raid1 sdb1[0]
          1068924800 blocks [2/1] [U_]
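Spotting a degraded array in this output can be automated. The following is a minimal sketch, assuming the two-line-per-array layout shown above (blank lines between the lines are tolerated); the function name and regular expressions are this example's own.

```python
import re

ARRAY_RE = re.compile(r"^(md\d+)\s*:\s*active\s+\S+\s+(.*)$")
STATUS_RE = re.compile(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]")


def degraded_arrays(mdstat_text):
    """Return {array: status} for arrays with fewer active than configured members."""
    degraded = {}
    current = None
    for line in mdstat_text.splitlines():
        line = line.strip()
        m = ARRAY_RE.match(line)
        if m:
            current = m.group(1)
            continue
        s = STATUS_RE.search(line)
        if s and current is not None:
            configured, active = int(s.group(1)), int(s.group(2))
            if active < configured:
                degraded[current] = s.group(3)
            current = None
    return degraded


# Two entries copied from the examples above.
SAMPLE = """\
md4 : active raid1 sdd6[1] sda6[0]
      88501952 blocks [2/2] [UU]
md5 : active raid1 sdb1[0]
      1068924800 blocks [2/1] [U_]
"""

print(degraded_arrays(SAMPLE))
```

On a live host the input would be the contents of /proc/mdstat rather than the embedded sample.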

Alerting: mdmonitor

The raid feature will enter the mail address to send problem reports in /etc/mdadm.conf and turn on the mdmonitor service if it detects any devices in /proc/mdstat. If a device fails, it will send mails like this one:

    From: mdadm monitoring <root@iole1.ifh.de>
    To: ...
    Subject: Fail event on /dev/md4:iole1.ifh.de

    This is an automatically generated mail message from mdadm
    running on iole1.ifh.de

    A Fail event had been detected on md device /dev/md4.

    It could be related to component device /dev/sda6.

    Faithfully yours, etc.

This could be improved by specifying a program digesting MD events (see mdadm(8)). It also tends to send more mails than necessary, so it probably should not send mail to the request tracker.
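A program digesting MD events might look roughly like the sketch below. According to mdadm(8), such a program is called once per event with the event name, the md device and possibly a related component device as arguments. Which events deserve an alert is a choice made up for this example, as are the function names; the mdadm version installed on these hosts may use a different set of event names.

```python
import sys

# Events worth a mail; this selection is an assumption for illustration.
ALERT_EVENTS = {"Fail", "FailSpare", "DegradedArray"}


def format_alert(event, md_device, component=None):
    """Return a one-line alert, or None if the event should be ignored."""
    if event not in ALERT_EVENTS:
        return None
    text = "%s on %s" % (event, md_device)
    if component:
        text += " (component %s)" % component
    return text


def main(argv):
    # mdadm passes: event name, md device, and optionally a component device.
    if len(argv) < 3:
        return 2
    alert = format_alert(*argv[1:4])
    if alert:
        print(alert)  # a real handler might mail or log this instead
    return 0


# Simulated invocation with the event from the mail shown above.
main(["md-event", "Fail", "/dev/md4", "/dev/sda6"])
```

Filtering in one place like this would also be a natural spot to suppress the repeated mails mentioned above.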

Rebuilding


This is what /proc/mdstat on iole2 (iole1 is similar) looked like after a shutdown of the raid-iole controller, followed by a controller reset a few minutes later:

    Personalities : [raid1]
    read_ahead 1024 sectors
    Event: 12
    md0 : active raid1 sdd1[1] sda1[0](F)
          264960 blocks [2/1] [_U]
    md3 : active raid1 sdd2[1] sda2[0](F)
          10482304 blocks [2/1] [_U]
    md2 : active raid1 sdd3[1] sda3[0]
          2096384 blocks [2/2] [UU]
    md1 : active raid1 sdd5[1] sda5[0](F)
          1052160 blocks [2/1] [_U]
    md4 : active raid1 sdd6[1] sda6[0](F)
          88501952 blocks [2/1] [_U]
    md5 : active raid1 sde1[1] sdb1[0]
          1068924800 blocks [2/2] [UU]
    md6 : active raid1 sdf1[1] sdc1[0](F)
          1171331136 blocks [2/1] [_U]
    unused devices: <none>

Access on the SCSI level was re-established without any problems. The devices were not removed from the list of targets. Notice that not all software mirrors are degraded: if a mirror is not accessed during the downtime of a controller, it will survive. (The failure of md0 = /boot above was forced for the test.)

To rebuild the mirrors, all devices that failed (and are available again) have to be hot-removed from the mirrors and then hot-added again:

    [iole2] ~ # mdadm -a /dev/md0 /dev/sda1
    mdadm: hot add failed for /dev/sda1: Device or resource busy
    [iole2] ~ # mdadm -r /dev/md0 /dev/sda1
    mdadm: hot removed /dev/sda1
    [iole2] ~ # mdadm -a /dev/md0 /dev/sda1
    mdadm: hot added /dev/sda1

If you see device or resource busy errors upon hot-add, you probably forgot the hot-remove. Repeat this for all degraded mirrors. They will be rebuilt one by one. /proc/mdstat will look like this after all failed devices have been re-added but some have not been resynced yet:

    Personalities : [raid1]
    read_ahead 1024 sectors
    Event: 29
    md0 : active raid1 sda1[0] sdd1[1]
          264960 blocks [2/2] [UU]
    md3 : active raid1 sda2[0] sdd2[1]
          10482304 blocks [2/2] [UU]
    md2 : active raid1 sdd3[1] sda3[0]
          2096384 blocks [2/2] [UU]
    md1 : active raid1 sda5[0] sdd5[1]
          1052160 blocks [2/2] [UU]
    md4 : active raid1 sda6[2] sdd6[1]
          88501952 blocks [2/1] [_U]
          [====>................] recovery = 23.8% (21089984/88501952) finish=73.5min speed=15264K/sec
    md5 : active raid1 sde1[1] sdb1[0]
          1068924800 blocks [2/2] [UU]
    md6 : active raid1 sdc1[2] sdf1[1]
          1171331136 blocks [2/1] [_U]
    unused devices: <none>
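Since the hot-remove/hot-add pair has to be repeated for every failed member, the command list can be derived from the (F) markers in /proc/mdstat. This is a sketch only: it prints the commands rather than running them, the function name is invented, and it assumes the failed devices are reachable again.

```python
import re

ARRAY_RE = re.compile(r"^(md\d+)\s*:\s*active\s+\S+\s+(.*)$")
FAILED_RE = re.compile(r"(\w+)\[\d+\]\(F\)")


def readd_commands(mdstat_text):
    """Return mdadm commands that hot-remove and re-add every failed member."""
    commands = []
    for line in mdstat_text.splitlines():
        m = ARRAY_RE.match(line.strip())
        if not m:
            continue
        array, members = m.group(1), m.group(2)
        for dev in FAILED_RE.findall(members):
            commands.append("mdadm -r /dev/%s /dev/%s" % (array, dev))
            commands.append("mdadm -a /dev/%s /dev/%s" % (array, dev))
    return commands


# Two entries copied from the post-reset /proc/mdstat shown earlier.
SAMPLE = """\
md0 : active raid1 sdd1[1] sda1[0](F)
      264960 blocks [2/1] [_U]
md2 : active raid1 sdd3[1] sda3[0]
      2096384 blocks [2/2] [UU]
"""

for cmd in readd_commands(SAMPLE):
    print(cmd)
```

Printing instead of executing keeps a human in the loop, which seems prudent given that a drive may not have survived the controller reset.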

Performance

Rebuild Speed Limit

The md driver will limit the rebuild speed to 10 MB/s by default, to spare some bandwidth for I/O. To speed this up, run something like

    echo 50000 >>| /proc/sys/dev/raid/speed_limit_max

The value is in KB/s. The >>| redirection is zsh syntax; in other shells use >.
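To get a feeling for what the limit means in practice, the expected resync time can be estimated from the block counts in /proc/mdstat (1 KB blocks) and a speed in KB/s. The helper below is a small worked example; its name is made up, and the estimate is only a lower bound when the limit rather than the hardware is the bottleneck.

```python
def resync_seconds(blocks_remaining, speed_kb_per_s):
    """Time to copy `blocks_remaining` 1 KB blocks at `speed_kb_per_s` KB/s."""
    return blocks_remaining / speed_kb_per_s


# Recovery line of md4 in the Rebuilding section:
# 21089984 of 88501952 blocks done at 15264K/sec.
remaining = 88501952 - 21089984
print("md4: %.1f min left" % (resync_seconds(remaining, 15264) / 60))

# Best case for a full resync of md5 (1068924800 blocks) at the 50000 limit.
print("md5: %.1f h minimum" % (resync_seconds(1068924800, 50000) / 3600))
```

The first figure comes out close to the finish=73.5min reported by the kernel. The second shows that even at the raised limit a single large mirror needs several hours.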

SCSI transfer speed

The Infortrend A16U-G1A3 can do 160 MB/s. To check that this speed is used:

- on the controller (menu "view and edit channels"), check that

    DefSynClk = CurSynClk = 80.00 MHz
    DefWid = CurWid = Wide

- on the host (files /proc/scsi/aic79xx/[01]), check that for all targets:

    Goal = Curr = 160.000MB/s transfers (80.000MHz DT, 16bit)

After an incident, you may see something different, like this:

    Target 1 Negotiation Settings
    User: 320.000MB/s transfers (160.000MHz DT|IU|RTI|QAS, 16bit)
    Goal: 35.714MB/s transfers (17.857MHz, 16bit)
    Curr: 35.714MB/s transfers (17.857MHz, 16bit)
    Transmission Errors 19
    Channel A Target 1 Lun 0 Settings
    Commands Queued 1446264
    Commands Active 3
    Command Openings 23
    Max Tagged Openings 32
    Device Queue Frozen Count 0

The only known remedy is to reboot the hosts (can be done independently). Controller resets are not required, but may be a good idea anyway. Of course both hosts have to be shut down then.
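The host-side check can be sketched as a small parser that flags targets whose Goal or Curr rate is below 160 MB/s. It assumes the proc file is line-oriented as in the excerpt above. The Target 1 lines are taken from that excerpt, the Target 2 lines are constructed from the expected values for illustration, and the function name is invented.

```python
import re

TARGET_RE = re.compile(r"^Target (\d+) Negotiation Settings")
RATE_RE = re.compile(r"^(Goal|Curr):\s+([\d.]+)MB/s")


def slow_targets(proc_text, expected_mb_s=160.0):
    """Return {target: {"Goal": rate, "Curr": rate}} for targets below the expected rate."""
    rates = {}
    target = None
    for line in proc_text.splitlines():
        line = line.strip()
        t = TARGET_RE.match(line)
        if t:
            target = int(t.group(1))
            rates[target] = {}
            continue
        r = RATE_RE.match(line)
        if r and target is not None:
            rates[target][r.group(1)] = float(r.group(2))
    return {
        tgt: vals
        for tgt, vals in rates.items()
        if any(v < expected_mb_s for v in vals.values())
    }


SAMPLE = """\
Target 1 Negotiation Settings
    User: 320.000MB/s transfers (160.000MHz DT|IU|RTI|QAS, 16bit)
    Goal: 35.714MB/s transfers (17.857MHz, 16bit)
    Curr: 35.714MB/s transfers (17.857MHz, 16bit)
Target 2 Negotiation Settings
    User: 320.000MB/s transfers (160.000MHz DT|IU|RTI|QAS, 16bit)
    Goal: 160.000MB/s transfers (80.000MHz DT, 16bit)
    Curr: 160.000MB/s transfers (80.000MHz DT, 16bit)
"""

print(slow_targets(SAMPLE))
```

Run against the contents of /proc/scsi/aic79xx/0 and /proc/scsi/aic79xx/1, a non-empty result would indicate that a host reboot should be scheduled.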